Introduction

Sora is a text-to-video artificial intelligence (AI) model developed by OpenAI. It goes beyond a typical video-creation tool: it can generate complex, high-quality video clips from simple text descriptions, animate still images, and extend or edit existing videos. The model’s name, “Sora”, comes from the Japanese word for “sky”, chosen to symbolize the “limitless creative potential” it promises.

OpenAI’s goal with Sora is not only to make videos, but to “teach AI to understand and simulate the physical world in motion”. This positions Sora as a foundational step toward building general-purpose “world simulators” that can learn and replicate the dynamic laws of reality.

Groundbreaking Technology: How Sora Works

Sora’s capabilities rest on two core technical concepts:

- Diffusion Transformer (DiT) architecture: At its core, Sora is a diffusion model built on a Transformer architecture. A diffusion model starts from random noise and gradually refines it over many steps into a coherent image or video. By replacing the traditional U-Net backbone with a Transformer, Sora takes advantage of the Transformer’s favorable scaling behavior: performance improves predictably as the model is given more data and compute.

- Spacetime patches: This is one of Sora’s key innovations. Instead of processing raw video pixels, Sora first compresses the video into a lower-dimensional latent space. It then splits this latent representation into “spacetime patches”, the equivalent of “tokens” in a large language model. This approach lets Sora work with videos and images of different resolutions, durations, and aspect ratios without cropping or resizing them, which helps it learn from more diverse data and considerably improves the composition and framing of what it generates.
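
To make the spacetime-patch idea concrete, here is a minimal sketch in plain Python. It is not Sora’s actual implementation: the function names `spacetime_patches` and `make_latent`, the single-channel nested-list latent, and the 2×2×2 patch size are all assumptions chosen purely for illustration. The sketch cuts a latent of shape (frames, height, width) into non-overlapping blocks and flattens each block into one token, so inputs of different sizes simply produce token sequences of different lengths.

```python
from typing import List

# Hypothetical single-channel latent, indexed as latent[t][y][x].
Latent = List[List[List[float]]]


def spacetime_patches(latent: Latent, pt: int, ph: int, pw: int) -> List[List[float]]:
    """Split a (T, H, W) latent into non-overlapping pt x ph x pw blocks.

    Each block is flattened into one "token" (a flat list of values).
    """
    T, H, W = len(latent), len(latent[0]), len(latent[0][0])
    if T % pt or H % ph or W % pw:
        raise ValueError("latent dimensions must be divisible by the patch size")

    tokens = []
    for t0 in range(0, T, pt):          # step through time
        for y0 in range(0, H, ph):      # step through height
            for x0 in range(0, W, pw):  # step through width
                tokens.append([
                    latent[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ])
    return tokens


def make_latent(T: int, H: int, W: int) -> Latent:
    """Build a toy latent whose values encode their own (t, y, x) position."""
    return [[[float((t * H + y) * W + x) for x in range(W)]
             for y in range(H)]
            for t in range(T)]


# Three inputs with different aspect ratios and durations, same patch size.
wide = spacetime_patches(make_latent(4, 4, 8), pt=2, ph=2, pw=2)
tall = spacetime_patches(make_latent(4, 8, 4), pt=2, ph=2, pw=2)
short = spacetime_patches(make_latent(2, 4, 4), pt=2, ph=2, pw=2)

print(len(wide), len(tall), len(short))  # 16 16 4
```

No input had to be cropped or resized: the wide and tall clips each become 16 tokens, and the shorter clip becomes 4. A Transformer, which accepts variable-length sequences, can consume all three.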

Limitless Creative Possibilities

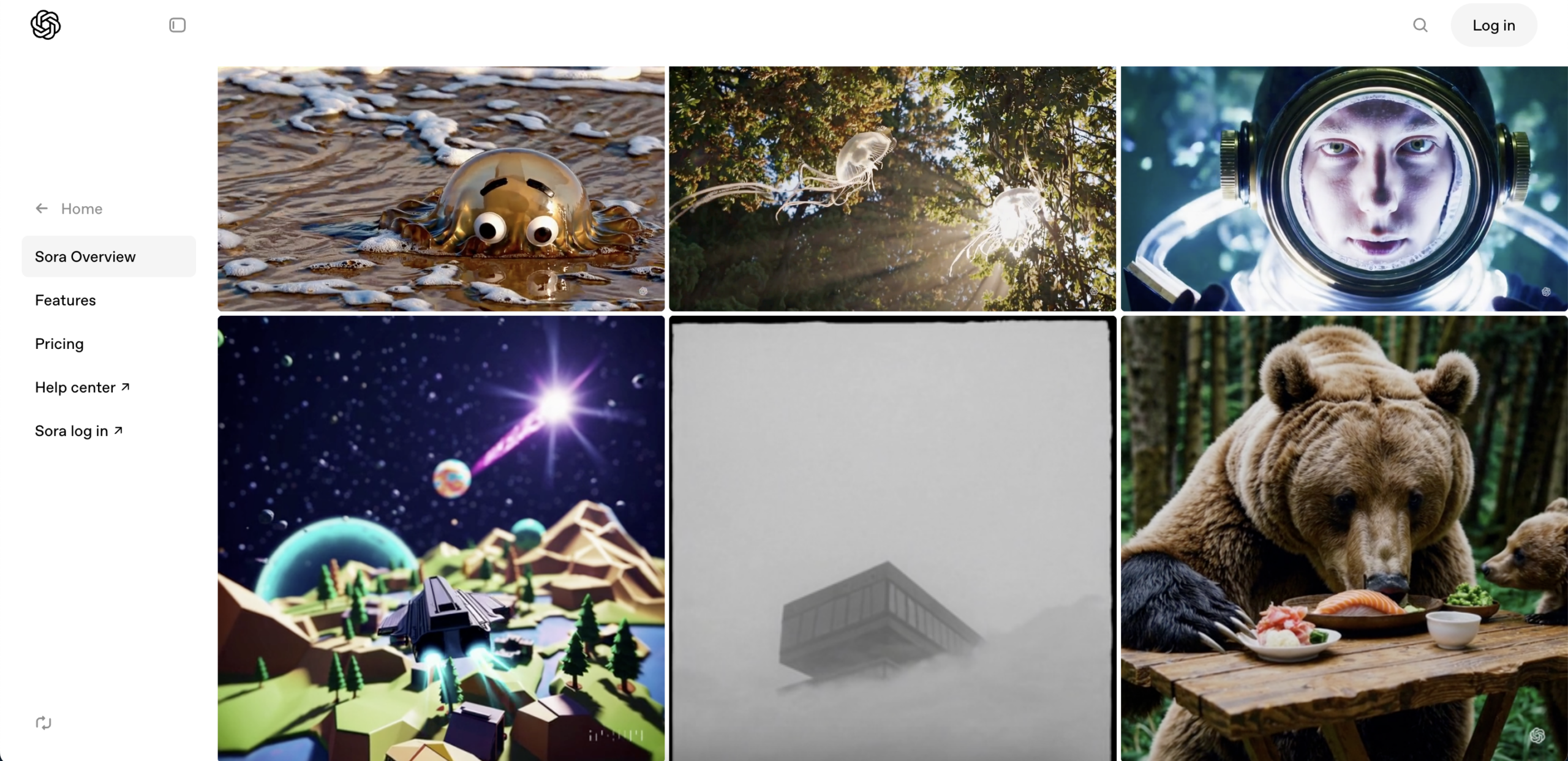

Sora demonstrates a range of capabilities that make it a powerful creative tool:

- High-quality video generation: Sora can generate videos up to one minute long at high visual quality, keeping objects and context consistent even when they move in complex ways or are temporarily occluded.

- Versatile editing toolkit: Users can animate still images, edit existing videos, combine two different videos into one seamless clip, or create perfectly looping videos.

- Emerging simulation capabilities: Sora shows early signs of simulating the physical world, such as modeling simple actions like a person taking a bite out of a hamburger. It can also simulate digital worlds such as Minecraft with high fidelity.

Current Limitations

Revolutionary as it is, Sora still has significant limitations, which indicate that it is still under development:

- Understanding of physics and causality: The model struggles to accurately simulate complex physical interactions, such as glass shattering. It also does not always handle cause and effect consistently; for example, a cookie may show no bite mark after a character has eaten from it.

- Spatial logic and consistency: Sora can confuse spatial details such as “left” and “right”, and it sometimes makes objects or characters appear in a scene in illogical ways.

- Difficult to control: Writing prompts that produce exactly the desired result can be very hard, and the output does not always match a detailed description.

Impact and Concerns

The arrival of Sora brings both immense opportunities and challenges:

- For the creative industry: Sora democratizes video production, allowing individuals to create high-quality content without large budgets. However, it also threatens jobs in video production, visual effects, and editing.

- Ethical issues: The ability to create hyperrealistic videos raises serious concerns about disinformation, propaganda, and deepfakes. In addition, the lack of transparency about training data has led to intense debate over copyright. OpenAI states that it is implementing safety measures, including labeling AI-generated content with C2PA metadata and restricting harmful prompts.

Conclusion

In summary, Sora is a technological leap that ushers in a new era of AI-driven video creation. Although limitations remain, its potential to change how we create and interact with digital content is undeniable.